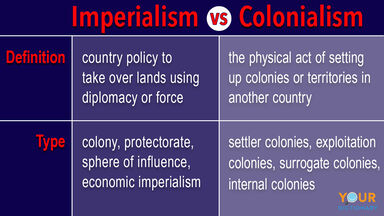

Cultural-imperialism Definition

noun

The practice of imposing the culture of one country on another, especially if the latter is being invaded by the former.

Source: Wiktionary
